Multi-Robot System for Load Transport

Senior Capstone Project

May 2019

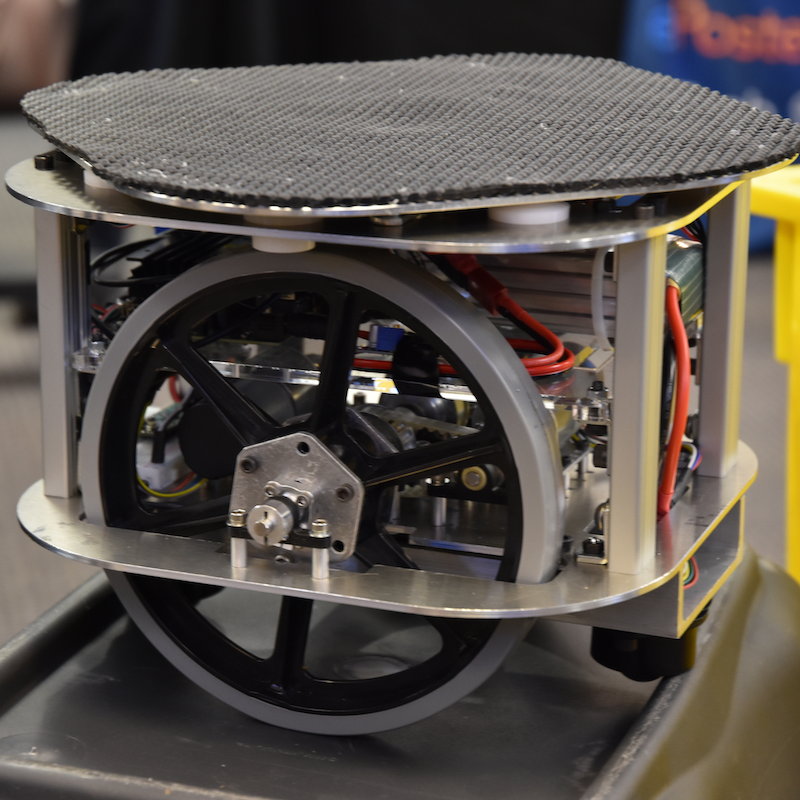

For our senior capstone project, our team developed a multi-robot system (MRS) that can transport loads of arbitrary size. A formation of agents is loaded with an object, and the whole system is then guided by applying a small push to the object. Each agent independently measures the force vector and effectively amplifies the pushing force by driving in that direction. This enables a single person to move a relatively massive object through a warehouse.
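The force-following behavior can be sketched as a proportional mapping from the measured push to a velocity command. This is a minimal sketch, not the project's actual controller: the function name `force_to_velocity`, the gain, the deadband, and the speed limit are hypothetical values chosen for illustration, and it assumes each agent can read a planar force vector (fx, fy) in newtons.

```python
import math


def force_to_velocity(fx, fy, gain=0.05, deadband=2.0, v_max=0.5):
    """Map a measured planar push force (N) to a velocity command (m/s).

    Hypothetical parameters: gain in (m/s)/N, deadband in N, v_max in m/s.
    """
    magnitude = math.hypot(fx, fy)
    if magnitude <= deadband:
        # Ignore sensor noise and incidental contact below the deadband
        return 0.0, 0.0
    # Scale only the force above the deadband so speed ramps up from zero,
    # then cap it at the speed limit
    speed = min(gain * (magnitude - deadband), v_max)
    # Drive in the direction of the applied force
    return speed * fx / magnitude, speed * fy / magnitude
```

A deadband plus a speed cap is a common choice for human-guided motion of this kind: it keeps sensor noise from making a stationary formation creep and limits speed if someone pushes hard.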

Our team of four began this project by surveying warehouse robotics and identifying areas where it could be improved. We decided to create a conceptual design for a robot system that, unlike conventional warehouse robots, would operate interactively with a human worker and could handle unstructured processes. We presented a technical proposal to the senior R&D team at Amazon Robotics and received funding to build a prototype.

We built two prototype iterations; our final prototype consisted of three functioning robots that operated together as a system. At the end of the term, we won the Most Outstanding Senior Capstone Project in Mechanical Engineering award.

An experimental part of the project explored having the agents autonomously arrange themselves into a formation around a perceived object. Perception of the object and relative localization of the agents with respect to one another were accomplished with the Intel RealSense D435i.

Imitation Learning Arm

February 2020

Providing motion instructions for a robot arm is difficult. Industrial arms require training or expert knowledge to operate. Other arms, particularly collaborative ones, let the user position the end effector directly and define waypoints. However, waypoint following captures only a limited part of the arm's full range of motion and provides no interface for positioning individual joints.

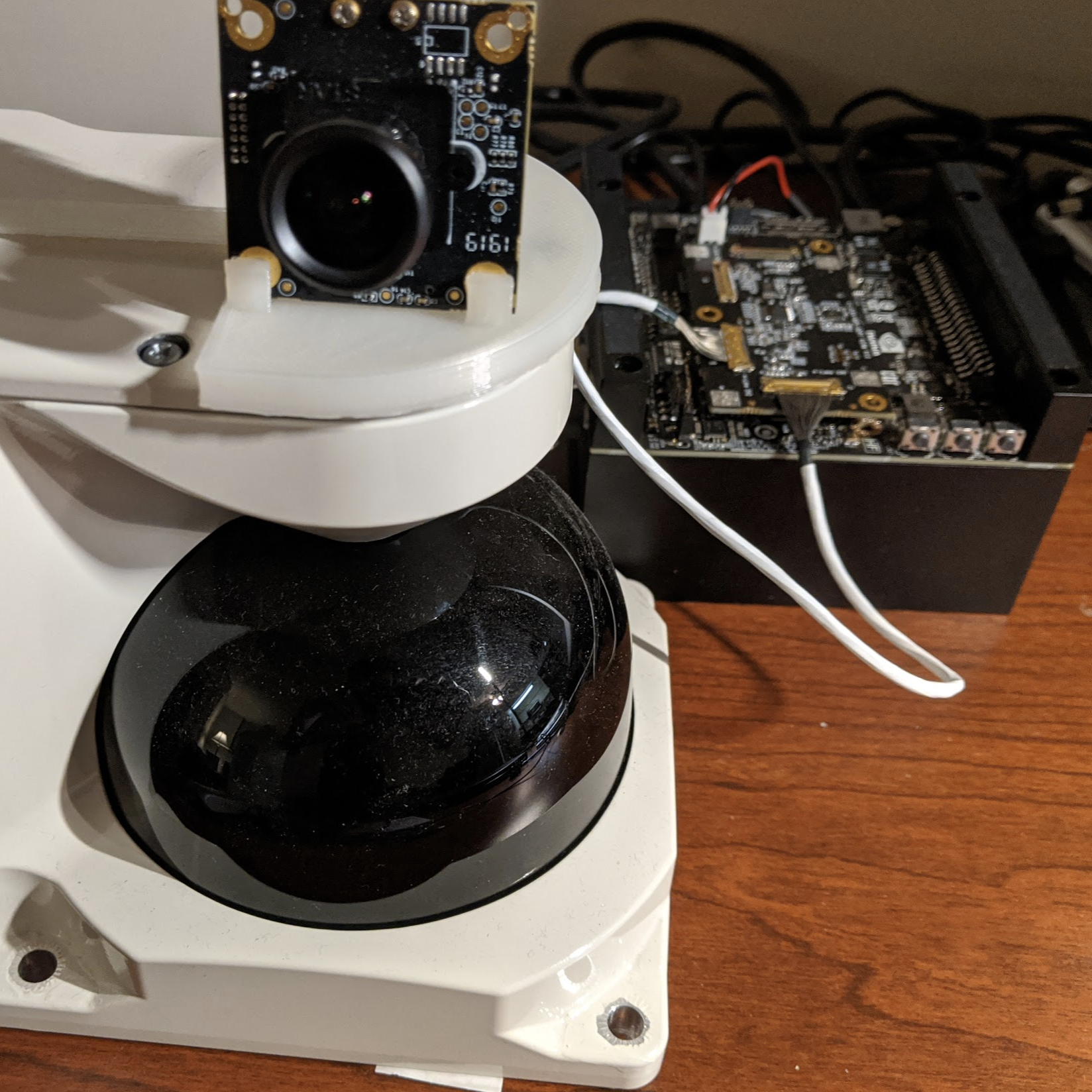

Using a prototype LiDAR unit from Panasonic, we're developing a system by which a human can guide a robot arm by demonstration, providing a framework for imitation learning.

The first stage of this project is having a Sawyer robot arm mimic the motion of a human arm in real time. By fusing the point cloud from the LiDAR with RGB images, we can reliably estimate the pose of the human arm with an Extended Kalman Filter (EKF). The Sawyer can then be commanded with joint angles mapped from those of the human arm. Alternatively, we're experimenting with commanding the x, y, z position of the end effector and biasing the nullspace toward the human arm's joint angles.
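The nullspace-biasing idea can be illustrated with standard resolved-rate control on a simplified arm. This minimal sketch assumes a planar three-link arm (not the seven-joint Sawyer) with hypothetical link lengths, gains, and function names. The end effector tracks a 2D target position as the primary task, while the joints are pulled toward reference angles `q_human` (standing in for angles estimated from the human arm) only through the nullspace projector I - pinv(J) J, so the posture bias does not disturb the end-effector motion to first order.

```python
import numpy as np

# Hypothetical link lengths (m) for a planar three-link arm
LINKS = np.array([0.4, 0.3, 0.2])


def forward_kinematics(q):
    """End-effector (x, y) of the planar arm for joint angles q."""
    phi = np.cumsum(q)  # absolute angle of each link
    return np.array([np.sum(LINKS * np.cos(phi)), np.sum(LINKS * np.sin(phi))])


def jacobian(q):
    """2x3 position Jacobian; joint i rotates every link from i outward."""
    phi = np.cumsum(q)
    J = np.zeros((2, len(q)))
    for i in range(len(q)):
        J[0, i] = -np.sum(LINKS[i:] * np.sin(phi[i:]))
        J[1, i] = np.sum(LINKS[i:] * np.cos(phi[i:]))
    return J


def nullspace_step(q, x_des, q_human, dt=0.01, kp=5.0, kn=2.0):
    """One resolved-rate step: track x_des, bias posture toward q_human."""
    J = jacobian(q)
    J_pinv = np.linalg.pinv(J)
    # Primary task: joint velocities that move the end effector toward x_des
    qdot_task = J_pinv @ (kp * (x_des - forward_kinematics(q)))
    # Secondary task: posture bias projected into the nullspace, so J @ qdot_bias == 0
    N = np.eye(len(q)) - J_pinv @ J
    qdot_bias = N @ (kn * (q_human - q))
    return q + dt * (qdot_task + qdot_bias)
```

Calling `nullspace_step` in a loop drives the end effector toward `x_des`; the redundant degree of freedom is then spent matching `q_human` as closely as the position task allows.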

Currently, we're using the NVIDIA Xavier for its performance in CNN inference.

Energy-cost LQR-RRT*

December 2018

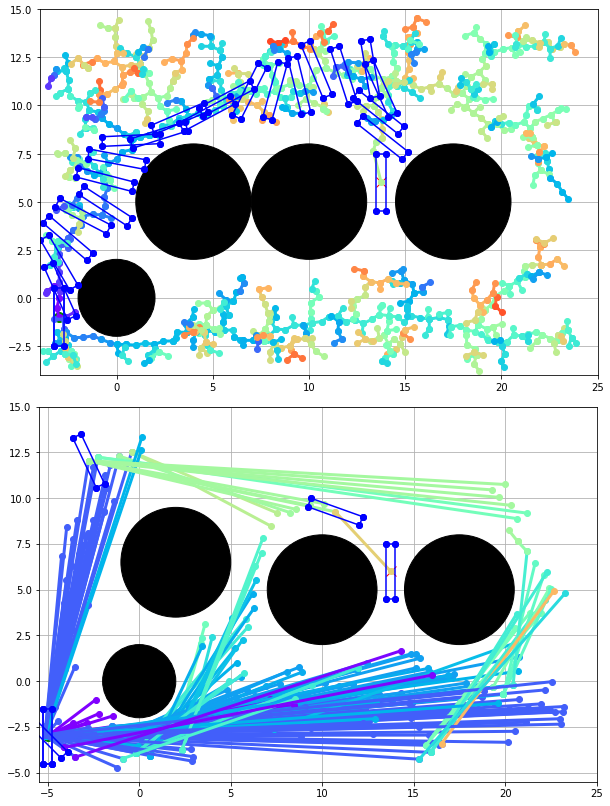

Motivated by the multi-robot system for load transport project, I wanted to plan motions that penalize energy for an MRS transporting a large object, in order to minimize the energy consumed by the motors.

The image shows plans generated by two algorithms for an MRS in a rectangular formation transporting a heavy object whose center of mass coincides with the centroid of the rectangle. The top plot shows RRT with state variables x, y, and theta for the rectangle; its plan is suboptimal in terms of energy consumption. The bottom plot shows an implementation of LQR-RRT* with a novel cost function that penalizes energy, using the state variables x, dx, y, dy, theta, and dtheta of the rectangle. The generated plan is much closer to optimal, since it avoids unnecessary motion.
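LQR-RRT* replaces the Euclidean distance of RRT* with the cost-to-go of an LQR controller linearized about the target state. Below is a minimal sketch of that metric for the six-variable state above. It assumes the rectangle is modeled as a decoupled double integrator driven by two forces and a torque, with hypothetical mass, inertia, time step, and weights. The control term u^T R u stands in for motor energy (resistive losses in a DC motor grow with current squared, and torque is roughly proportional to current). This is an illustrative proxy, not the cost function used in the project. Tree growth, steering, rewiring, and collision checking are omitted.

```python
import numpy as np


def double_integrator(dt, mass, inertia):
    """Discrete model with state (x, dx, y, dy, theta, dtheta), input (Fx, Fy, torque)."""
    A1 = np.array([[1.0, dt], [0.0, 1.0]])
    B1 = np.array([[0.5 * dt**2], [dt]])
    A = np.kron(np.eye(3), A1)
    # Scale each input channel by 1/mass or 1/inertia so inputs are forces and torque
    B = np.kron(np.diag([1.0 / mass, 1.0 / mass, 1.0 / inertia]), B1)
    return A, B


def solve_dare(A, B, Q, R, iters=5000):
    """Iterate the discrete algebraic Riccati equation until P stops changing."""
    P = Q.copy()
    for _ in range(iters):
        BtP = B.T @ P
        K = np.linalg.solve(R + BtP @ B, BtP @ A)
        P_next = Q + A.T @ P @ (A - B @ K)
        P_next = 0.5 * (P_next + P_next.T)  # keep P symmetric despite rounding
        converged = np.allclose(P_next, P, rtol=1e-10, atol=1e-12)
        P = P_next
        if converged:
            break
    K = np.linalg.solve(R + B.T @ P @ B, B.T @ P @ A)
    return P, K


def lqr_cost_to_go(x_from, x_to, P):
    """Quadratic LQR cost to regulate x_from to x_to.

    Exact when x_to is an equilibrium (zero velocity); otherwise an approximation.
    """
    e = x_from - x_to
    return float(e @ P @ e)


def nearest(tree, x_rand, P):
    """Tree node with the lowest LQR cost-to-go to the sample."""
    return min(tree, key=lambda node: lqr_cost_to_go(node, x_rand, P))


def near(tree, x_new, P, radius):
    """RRT* rewiring candidates; fixed radius here (RRT* usually shrinks it)."""
    return [node for node in tree if lqr_cost_to_go(node, x_new, P) < radius]
```

Raising R relative to Q makes the metric favor low-effort transitions, which is the knob an energy-penalizing planner would tune; for example, `double_integrator(0.1, 50.0, 10.0)` with `Q = np.eye(6)` and `R = np.eye(3)` gives a metric for a hypothetical 50 kg load.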

Fasimov

March 2018

With this project, I wanted to create a robot to study how people respond to a robot recognizing them. To do this, I designed Fasimov to navigate an environment, recognize people’s faces, and express emotions.

I wanted Fasimov to emote in human-like ways without mimicking human features. I first focused on motion in the “head” of the robot, which I designed to swivel with two degrees of freedom, acting like a human neck. In addition to motion, I added RGB lights to the top of the head. A speaker inside the robot allowed me to play sounds or text-to-speech audio. A ToF depth sensor and an ultrasonic rangefinder on the front allow the robot to navigate.